GPU Scarcity is Real.

Waste is Optional.

Stop paying for idle GPUs. Control Kubernetes GPU allocation for AI workloads.

Automatic GPU Detection and Release

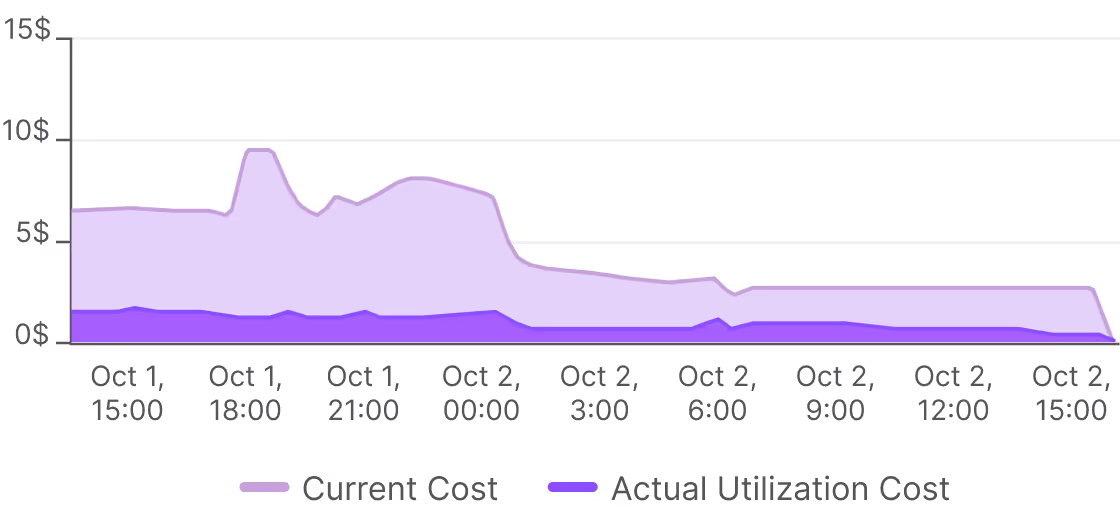

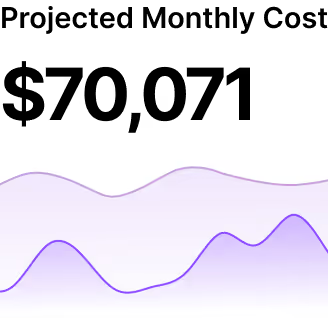

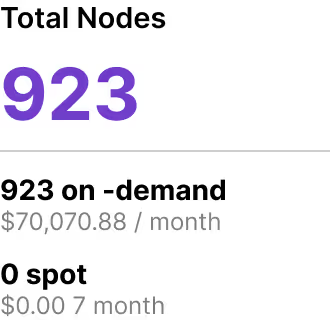

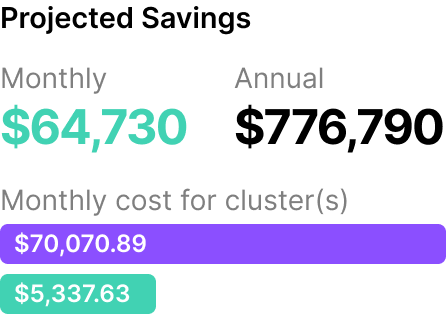

GPUs are expensive, scarce, and frequently over-provisioned for AI and ML workloads. Teams allocate conservatively, leaving GPUs idle between jobs or unused during traffic lulls. Manual cleanup is error-prone.

The result? GPU spend driven by fear and guesswork, not utilization.

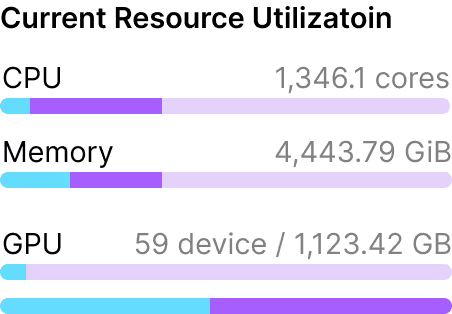

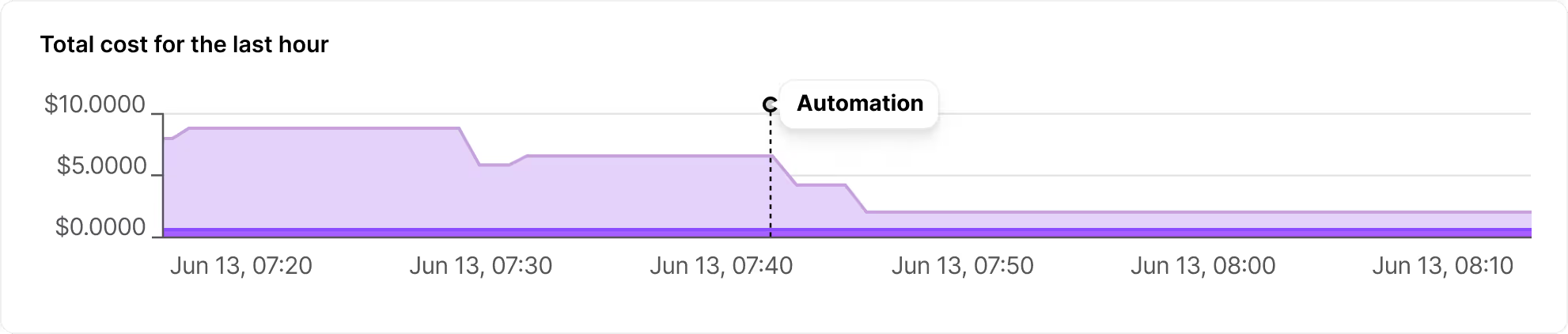

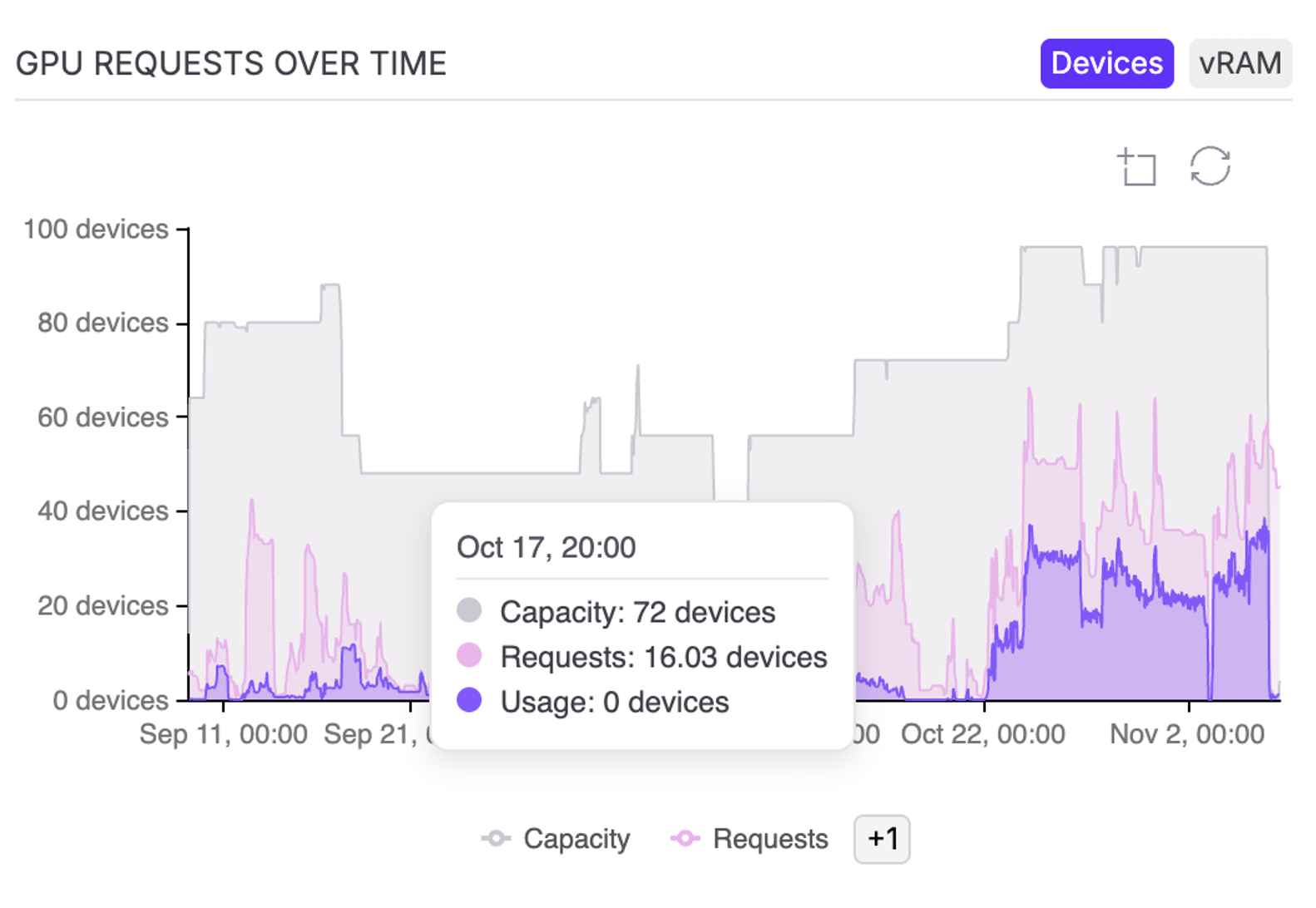

DevZero continuously monitors GPU allocation and actual usage across your Kubernetes clusters. When GPUs are allocated but unused, the platform automatically releases them based on your policies.

The system identifies three key waste patterns:

- ML training jobs that complete and leave GPUs idle

- AI inference endpoints with warm pools consuming capacity during low traffic

- Interactive notebooks left running after work ends
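As a rough illustration of the idle-detection idea described above, here is a minimal Python sketch. It is not DevZero's implementation: the `GpuAllocation` shape, the 5% "busy" threshold, and the 30-minute idle limit are all assumptions chosen for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Tuple


@dataclass
class GpuAllocation:
    """One workload's GPU allocation plus recent utilization samples (hypothetical shape)."""
    workload: str
    # (timestamp, GPU utilization percent) pairs, oldest first
    samples: List[Tuple[datetime, float]]


def idle_for(alloc: GpuAllocation, now: datetime, busy_pct: float = 5.0) -> timedelta:
    """Time elapsed since the last sample at or above busy_pct.

    If no sample was ever busy, count from the first sample; with no
    samples at all, report zero so nothing is released on missing data.
    """
    if not alloc.samples:
        return timedelta(0)
    busy_times = [ts for ts, pct in alloc.samples if pct >= busy_pct]
    start = max(busy_times) if busy_times else alloc.samples[0][0]
    return now - start


def release_candidates(allocs: List[GpuAllocation], now: datetime,
                       max_idle: timedelta) -> List[str]:
    """Names of workloads whose GPUs have been idle for at least max_idle."""
    return [a.workload for a in allocs if idle_for(a, now) >= max_idle]


now = datetime(2024, 1, 1, 12, 0)
finished_training = GpuAllocation("train-job", [
    (now - timedelta(minutes=90), 95.0),
    (now - timedelta(minutes=60), 0.0),
    (now - timedelta(minutes=30), 0.0),
])
live_inference = GpuAllocation("inference-api", [(now - timedelta(minutes=10), 40.0)])

print(release_candidates([finished_training, live_inference], now, timedelta(minutes=30)))
# prints ['train-job']
```

The finished training job is flagged because its last busy sample is 90 minutes old, while the inference endpoint that served traffic 10 minutes ago is left alone.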

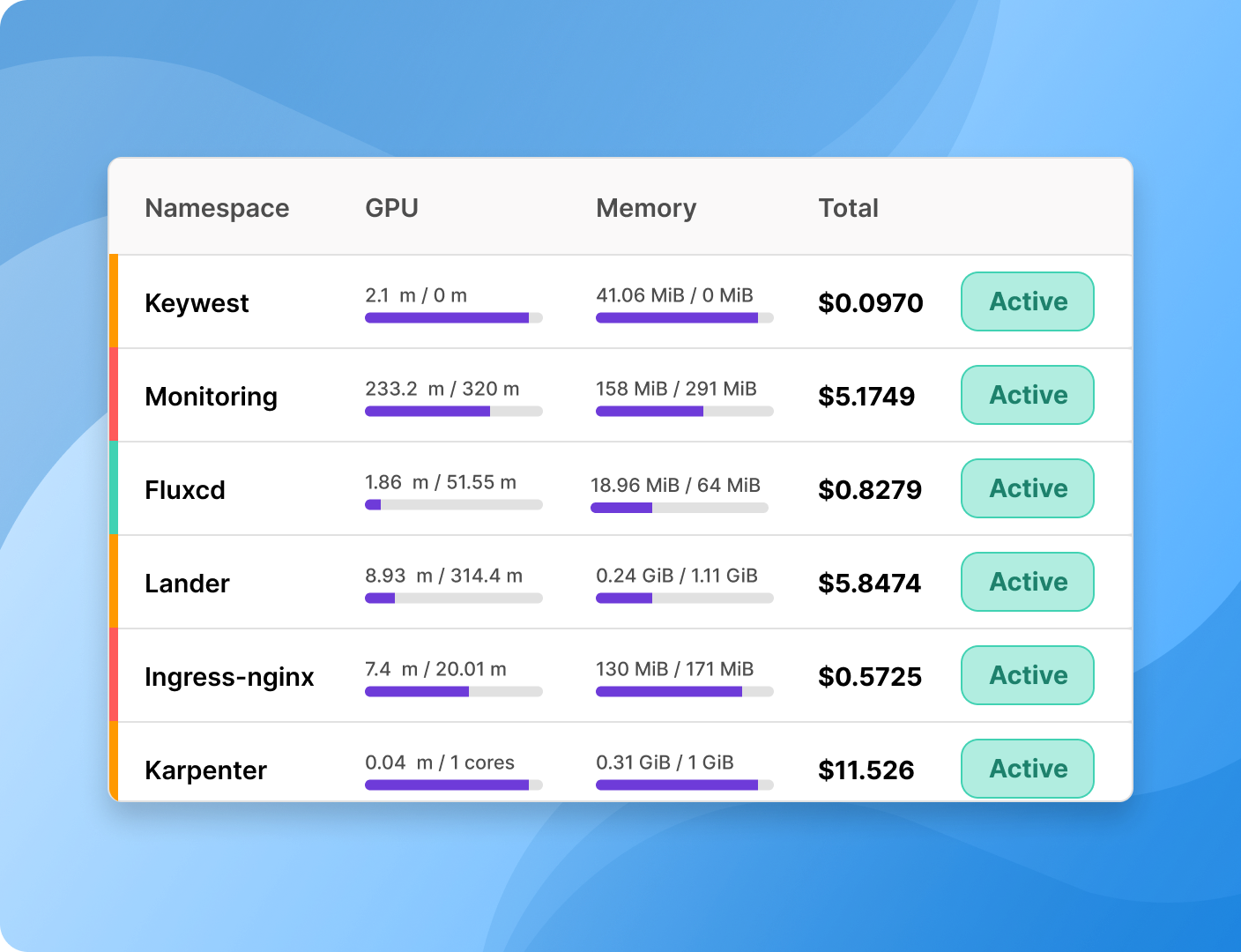

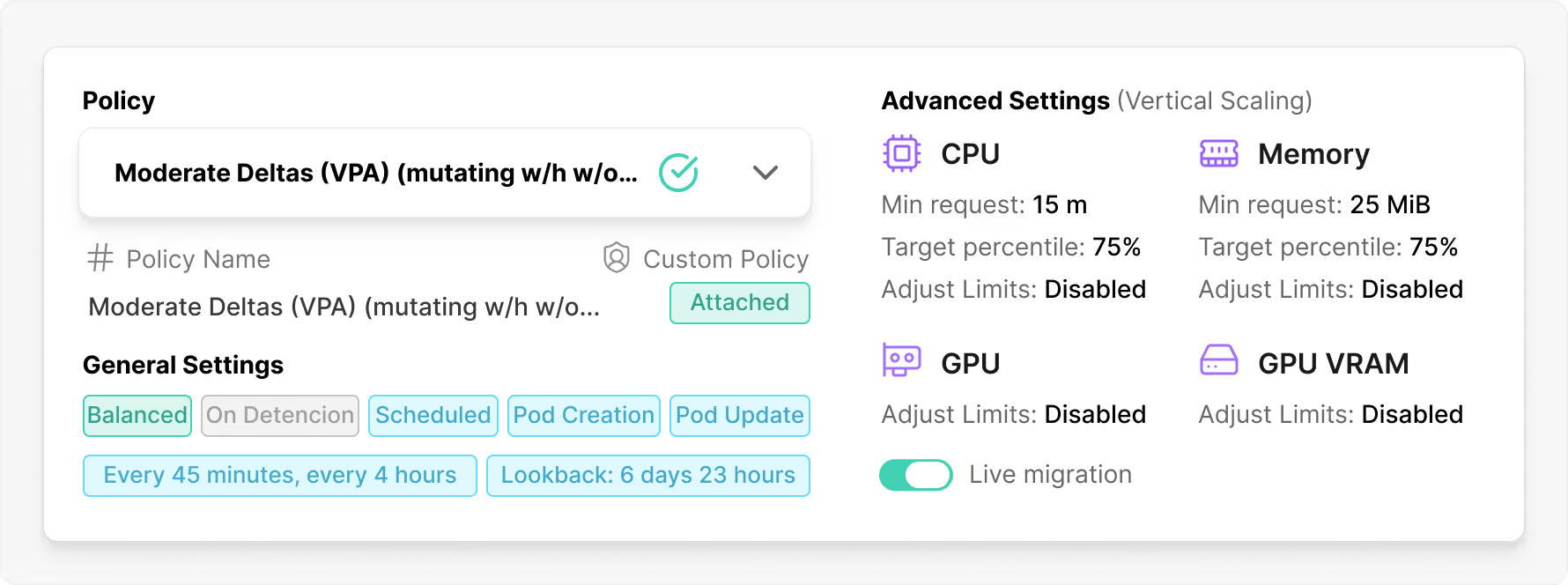

You set the rules; DevZero executes them. Define allocation duration, cleanup triggers, and which workloads can access GPU resources at the cluster, namespace, or workload level.
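One way to picture policies at three levels is a lookup where the most specific match wins. The sketch below is an assumption made for illustration: the page does not say how DevZero resolves overlapping policies, and `GpuPolicy` and `resolve_policy` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class GpuPolicy:
    """Hypothetical policy fields: idle limit and whether GPU access is allowed."""
    max_idle_minutes: int
    gpu_allowed: bool = True


def resolve_policy(
    cluster_policy: GpuPolicy,
    namespace_policies: Dict[str, GpuPolicy],
    workload_policies: Dict[Tuple[str, str], GpuPolicy],
    namespace: str,
    workload: str,
) -> GpuPolicy:
    """Pick the most specific policy: workload, then namespace, then cluster.

    This precedence order is an assumption, not documented DevZero behavior.
    """
    if (namespace, workload) in workload_policies:
        return workload_policies[(namespace, workload)]
    if namespace in namespace_policies:
        return namespace_policies[namespace]
    return cluster_policy


cluster_default = GpuPolicy(max_idle_minutes=30)
by_namespace = {"notebooks": GpuPolicy(max_idle_minutes=15)}
by_workload = {("serving", "chat-api"): GpuPolicy(max_idle_minutes=120)}

print(resolve_policy(cluster_default, by_namespace, by_workload, "serving", "chat-api").max_idle_minutes)
# prints 120
print(resolve_policy(cluster_default, by_namespace, by_workload, "notebooks", "alice").max_idle_minutes)
# prints 15
print(resolve_policy(cluster_default, by_namespace, by_workload, "batch", "etl").max_idle_minutes)
# prints 30
```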

Beyond Node Scaling: Granular GPU Tracking

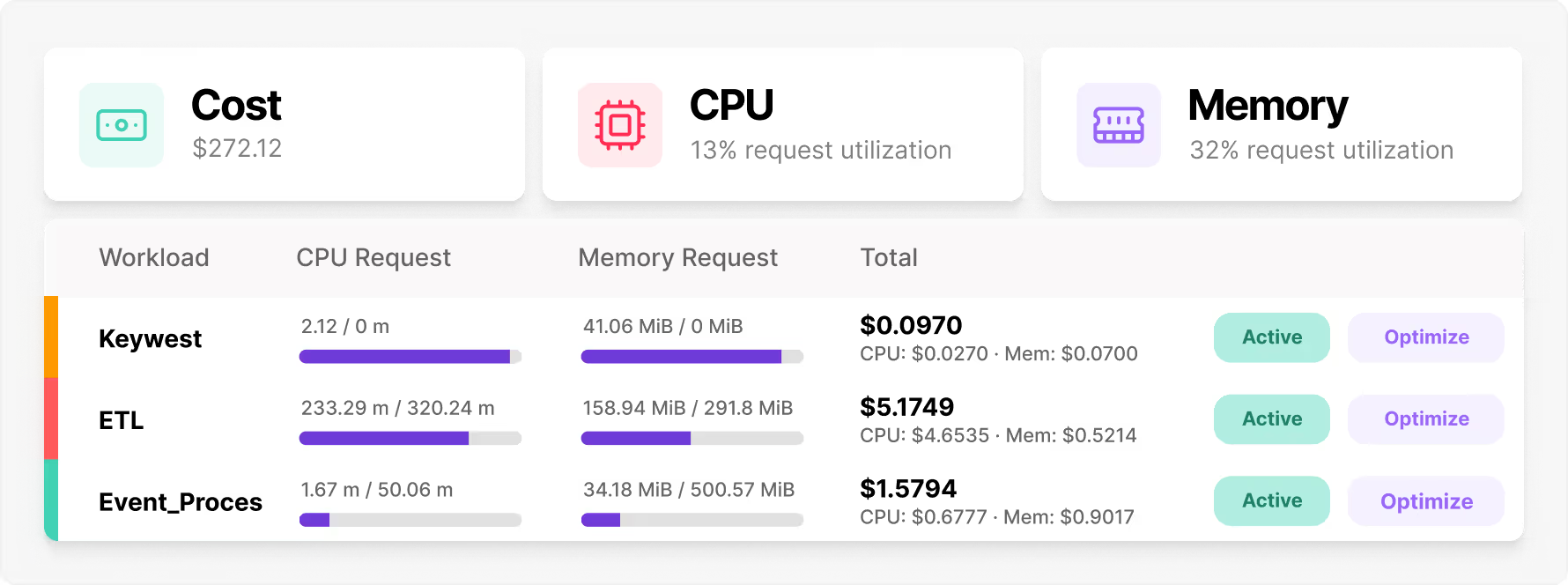

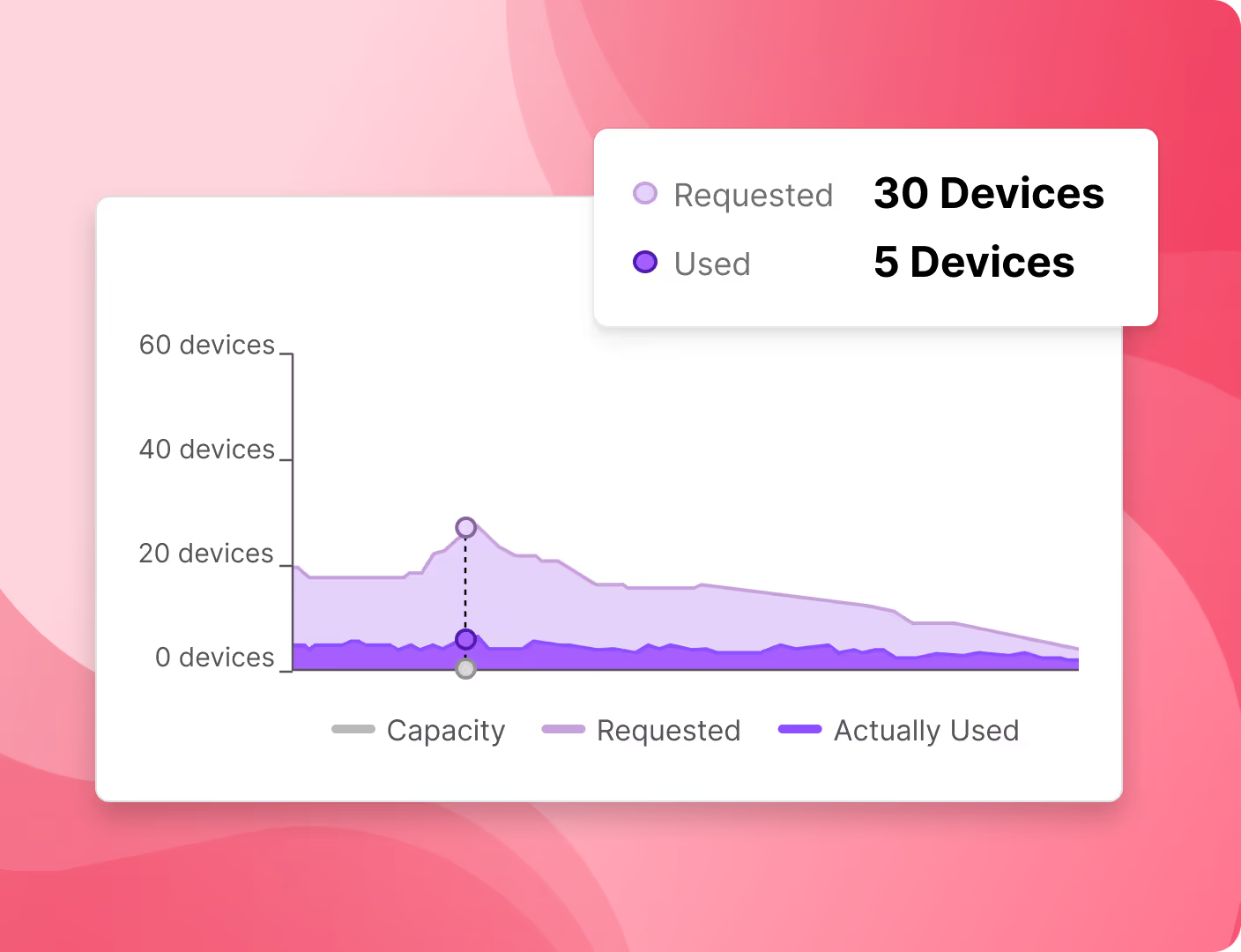

Traditional GPU management operates at the node level, missing significant waste. DevZero provides true workload-level optimization by monitoring individual GPU allocations and releasing them when specific jobs complete or go idle, not just when entire nodes are empty.

Node-level autoscalers scale down empty nodes. But a node with one small workload holding a full GPU won't scale down. DevZero releases that GPU allocation while the node remains active.

This captures waste across all AI workload patterns:

- Batch model training (high waste before and after runs)

- AI inference serving (warm pools during off-peak)

- Exploratory ML work (notebooks left running)
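The difference between node-level and workload-level views can be sketched with a toy cluster model. The data layout and function names below are hypothetical and only illustrate why an idle GPU on a non-empty node is invisible to a check that looks for empty nodes.

```python
from typing import Dict, List, Tuple

# node name -> list of (workload name, GPUs held, GPU currently busy?)
Cluster = Dict[str, List[Tuple[str, int, bool]]]


def nodes_eligible_for_scale_down(cluster: Cluster) -> List[str]:
    """Node-level view: only nodes with no workloads at all can be removed."""
    return sorted(node for node, workloads in cluster.items() if not workloads)


def idle_gpu_allocations(cluster: Cluster) -> List[Tuple[str, str, int]]:
    """Workload-level view: every allocation holding GPUs that are not busy."""
    return [
        (node, name, gpus)
        for node, workloads in sorted(cluster.items())
        for name, gpus, busy in workloads
        if gpus > 0 and not busy
    ]


cluster: Cluster = {
    "node-a": [],
    "node-b": [("web-sidecar", 0, False), ("stale-notebook", 1, False)],
    "node-c": [("train-job", 4, True)],
}

print(nodes_eligible_for_scale_down(cluster))
# prints ['node-a']
print(idle_gpu_allocations(cluster))
# prints [('node-b', 'stale-notebook', 1)]
```

Here `node-b` is not empty, so a node-level check skips it, yet the workload-level check still finds the notebook holding an idle GPU.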

DevZero complements tools like Karpenter and KEDA without replacing them. While those handle node provisioning and replica scaling, DevZero optimizes GPU allocations per workload. Many customers run them together, achieving deeper cost reduction through combined optimization.

Unlocking Existing GPU Capacity

Most teams don't need more GPUs. They need better utilization of existing capacity. At a typical 20-30% utilization, you still pay for 100% of the allocated capacity. For organizations constrained by GPU availability or budget, optimization means more work gets done without expanding infrastructure.
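To make the utilization arithmetic concrete, here is a small Python sketch. The $2.00 hourly price is a made-up figure for illustration, not a quoted rate, and the function names are hypothetical.

```python
def cost_per_utilized_gpu_hour(hourly_price: float, utilization: float) -> float:
    """Effective price of one hour of useful GPU work at a given utilization (0-1]."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in the range (0, 1]")
    return hourly_price / utilization


def wasted_spend(hourly_price: float, utilization: float, hours: float) -> float:
    """Spend on allocated-but-idle GPU time over a period."""
    if not 0 <= utilization <= 1:
        raise ValueError("utilization must be in the range [0, 1]")
    return hourly_price * hours * (1 - utilization)


price = 2.00  # hypothetical price per GPU-hour

print(cost_per_utilized_gpu_hour(price, 0.25))
# prints 8.0
print(cost_per_utilized_gpu_hour(price, 0.5))
# prints 4.0
print(wasted_spend(price, 0.25, hours=100))
# prints 150.0
```

At 25% utilization, each useful GPU-hour effectively costs four times the list price; doubling utilization to 50% halves that effective cost without adding hardware.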

DevZero delivers two critical outcomes for GPU infrastructure:

- Control AI costs by eliminating waste from idle GPUs across model training, inference serving, and exploratory workloads

- Do more with existing capacity so your GPUs can handle more AI workloads without additional hardware

GPUs are treated as dynamic resources, not static infrastructure. Allocated when needed, released when idle, managed continuously by policy. No manual cleanup required. Just GPU spend aligned to actual workload behavior.

How it Works

Eliminate GPU Waste with Intelligent Automation

DevZero eliminates GPU waste through automated idle detection and policy-driven lifecycle management. No app changes. Just better utilization and controlled costs.

Frequently asked questions

DevZero continuously monitors GPU allocation and actual usage patterns across your Kubernetes clusters running AI/ML workloads. When GPUs are allocated but show no activity, sit idle between model training runs, or remain reserved after inference jobs complete, the platform identifies them based on your policy settings and automatically releases them.

DevZero supports all GPU workload types in Kubernetes: ML model training workloads (batch-oriented, fixed duration), AI inference workloads (user-facing, latency-sensitive), and interactive workloads (notebooks, experimentation, ML development). The platform addresses idle capacity across all these patterns.

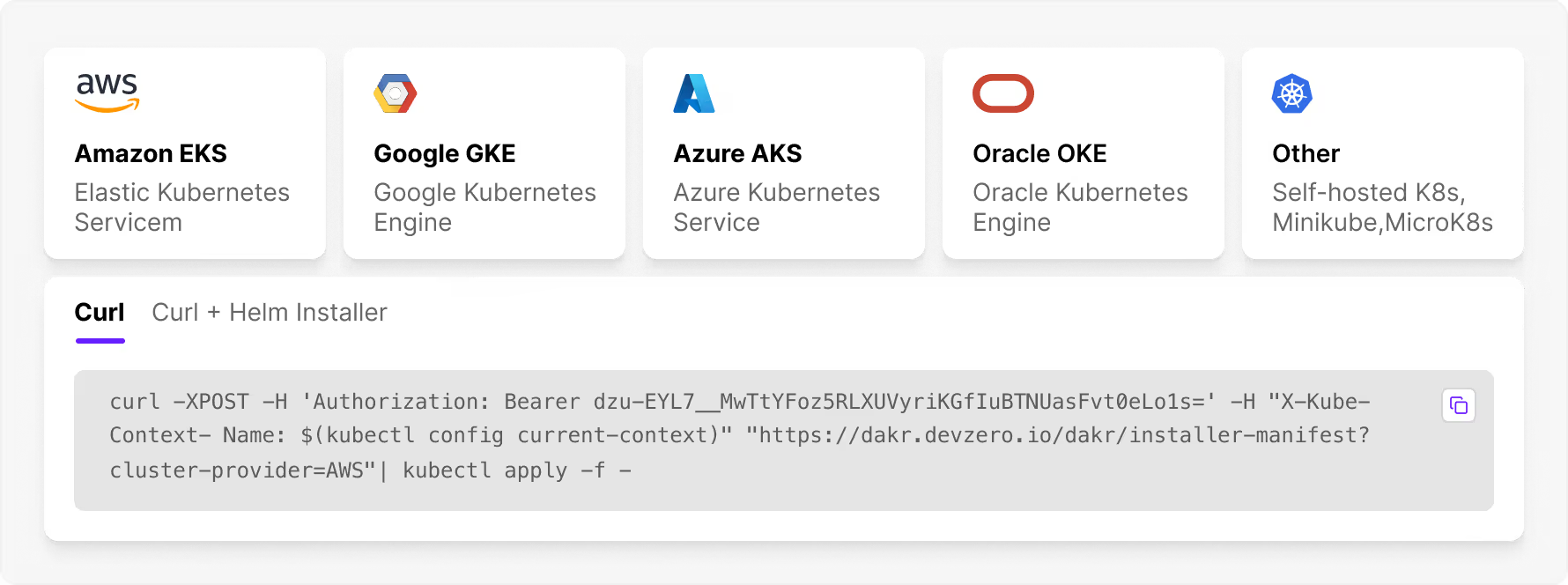

DevZero begins collecting telemetry data immediately after installation. Within hours, you'll have visibility into GPU utilization patterns and idle capacity. Policy-driven optimizations can be applied within days, as soon as you're comfortable with the recommendations.

No. DevZero releases GPUs only when they are idle or no longer needed. Active AI training jobs and inference workloads maintain full GPU access. The platform is designed to eliminate waste without impacting performance or introducing latency to running ML models.

Absolutely. Set policies at cluster, namespace, or workload level. Define which AI/ML workloads can use GPUs, how long allocations persist for training vs. inference, and when resources should be released. You maintain complete visibility and control throughout the optimization process.

Yes. DevZero complements rather than replaces autoscalers. While Karpenter and similar tools focus on node-level scaling, DevZero operates at the workload level, optimizing GPU allocation based on actual usage patterns. Many customers use both together for comprehensive optimization.

DevZero operators gather only resource utilization data (compute, memory, and network), along with workload names and types. We do not have access to logs or application-specific data. Moreover, our cost monitoring operator is read-only.